This post is the foreword written by Brad Smith for Microsoft’s report Governing AI: A Blueprint for the Future. The first part of the report details five ways governments should consider policies, laws, and regulations around AI. The second part focuses on Microsoft’s internal commitment to ethical AI, showing how the company is both operationalizing and building a culture of responsible AI.

“Don’t ask what computers can do, ask what they should do.”

That is the title of the chapter on AI and ethics in a book I co-authored in 2019. At the time, we wrote that, “This may be one of the defining questions of our generation.” Four years later, the question has seized center stage not just in the world’s capitals, but around many dinner tables.

As people have used or heard about the power of OpenAI’s GPT-4 foundation model, they have often been surprised or even astounded. Many have been enthused or even excited. Some have been concerned or even frightened. What has become clear to almost everyone is something we noted four years ago – we are the first generation in the history of humanity to create machines that can make decisions that previously could only be made by people.

Countries around the world are asking common questions. How can we use this new technology to solve our problems? How do we avoid or manage new problems it might create? How do we control technology that is so powerful?

These questions call not only for broad and thoughtful conversation, but also for decisive and effective action. This paper offers some of our ideas and suggestions as a company.

These suggestions build on the lessons we’ve been learning based on the work we’ve been doing for several years. Microsoft CEO Satya Nadella set us on a clear course when he wrote in 2016 that, “Perhaps the most productive debate we can have isn’t one of good versus evil: The debate should be about the values instilled in the people and institutions creating this technology.”

Since that time, we’ve defined, published, and implemented ethical principles to guide our work. And we’ve built out constantly improving engineering and governance systems to put these principles into practice. Today, we have nearly 350 people working on responsible AI at Microsoft, helping us implement best practices for building safe, secure, and transparent AI systems designed to benefit society.

New opportunities to improve the human condition

The resulting advances in our approach have given us the capability and confidence to see ever-expanding ways for AI to improve people’s lives. We’ve seen AI help save individuals’ eyesight, make progress on new cures for cancer, generate new insights about proteins, and provide predictions to protect people from hazardous weather. Other innovations are fending off cyberattacks and helping to protect fundamental human rights, even in nations afflicted by foreign invasion or civil war.

Everyday activities will benefit as well. By acting as a copilot in people’s lives, the power of foundation models like GPT-4 is turning search into a more powerful tool for research and improving productivity for people at work. And, for any parent who has struggled to remember how to help their 13-year-old child through an algebra homework assignment, AI-based assistance is a helpful tutor.

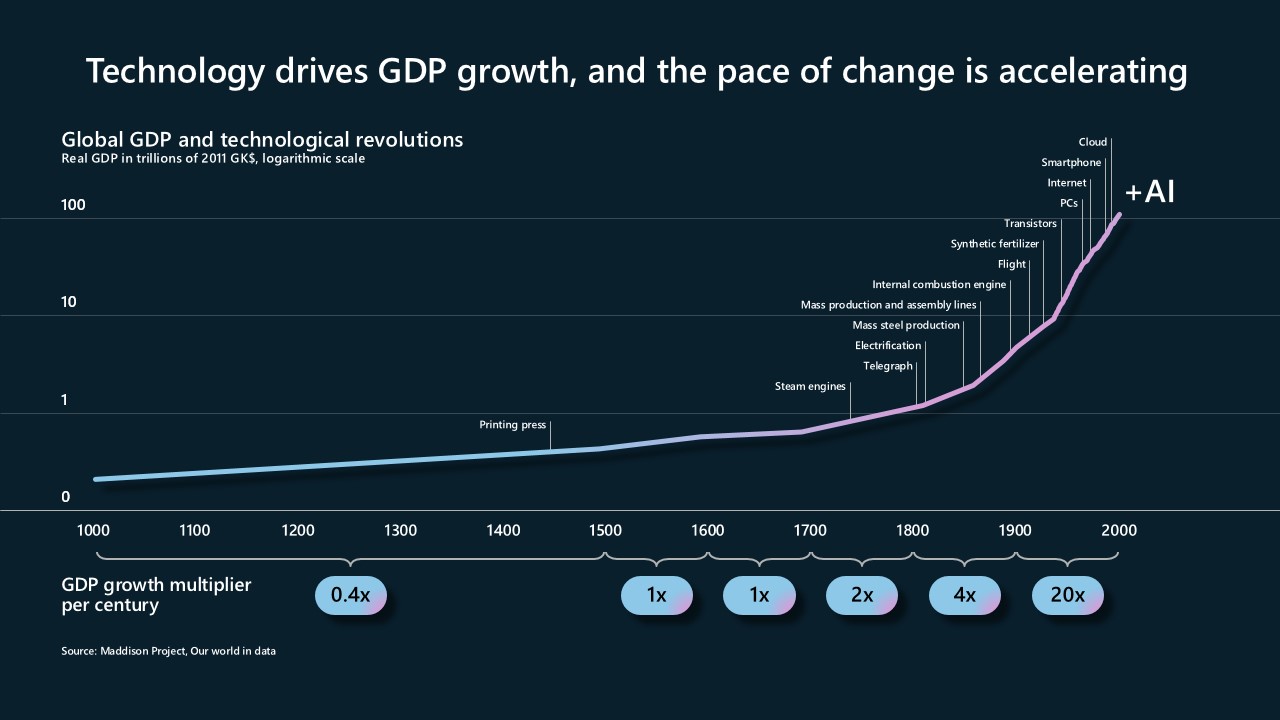

In so many ways, AI offers perhaps even more potential for the good of humanity than any invention that has preceded it. Since the invention of the printing press with movable type in the 1400s, human prosperity has been growing at an accelerating rate. Inventions like the steam engine, electricity, the automobile, the airplane, computing, and the internet have provided many of the building blocks for modern civilization. And, like the printing press itself, AI offers a new tool to genuinely help advance human learning and thought.

Guardrails for the future

Another conclusion is equally important: It’s not enough to focus only on the many opportunities to use AI to improve people’s lives. This is perhaps one of the most important lessons from the role of social media. Little more than a decade ago, technologists and political commentators alike gushed about the role of social media in spreading democracy during the Arab Spring. Yet, five years after that, we learned that social media, like so many other technologies before it, would become both a weapon and a tool – in this case aimed at democracy itself.

Today we are 10 years older and wiser, and we need to put that wisdom to work. We need to think early on and in a clear-eyed way about the problems that could lie ahead. As technology moves forward, it’s just as important to ensure proper control over AI as it is to pursue its benefits. We are committed and determined as a company to develop and deploy AI in a safe and responsible way. We also recognize, however, that the guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone.

When we at Microsoft adopted our six ethical principles for AI in 2018, we noted that one principle was the bedrock for everything else – accountability. This is the fundamental need: to ensure that machines remain subject to effective oversight by people, and the people who design and operate machines remain accountable to everyone else. In short, we must always ensure that AI remains under human control. This must be a first-order priority for technology companies and governments alike.

This connects directly with another essential concept. In a democratic society, one of our foundational principles is that no person is above the law. No government is above the law. No company is above the law, and no product or technology should be above the law. This leads to a critical conclusion: People who design and operate AI systems cannot be accountable unless their decisions and actions are subject to the rule of law.

In many ways, this is at the heart of the unfolding AI policy and regulatory debate. How do governments best ensure that AI is subject to the rule of law? In short, what form should new law, regulation, and policy take?

A five-point blueprint for the public governance of AI

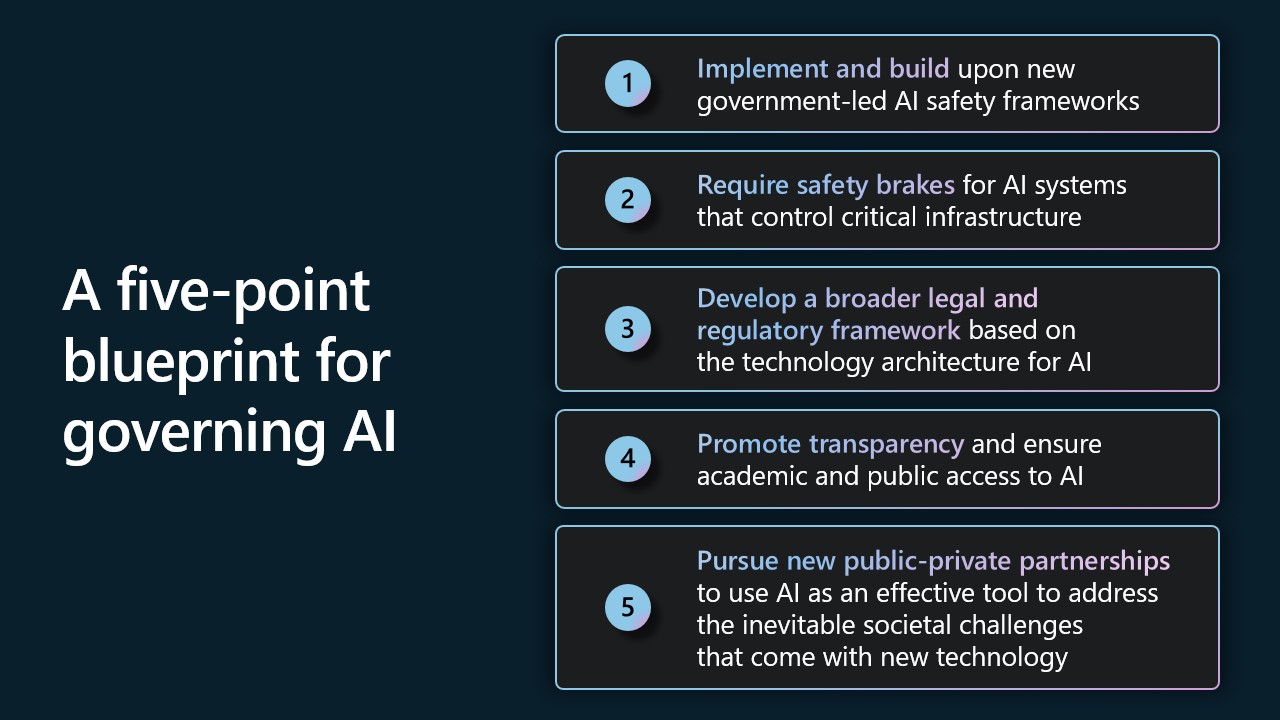

Section One of this paper offers a five-point blueprint to address several current and emerging AI issues through public policy, law, and regulation. We offer this recognizing that every part of this blueprint will benefit from broader discussion and require deeper development. But we hope this can contribute constructively to the work ahead.

First, implement and build upon new government-led AI safety frameworks. The best way to succeed is often to build on the successes and good ideas of others, especially when one wants to move quickly. In this instance, there is an important opportunity to build on work completed just four months ago by the U.S. National Institute of Standards and Technology, or NIST. Part of the Department of Commerce, NIST has completed and launched a new AI Risk Management Framework.

We offer four concrete suggestions to implement and build upon this framework, including commitments Microsoft is making in response to a recent White House meeting with leading AI companies. We also believe the administration and other governments can accelerate momentum through procurement rules based on this framework.

Second, require effective safety brakes for AI systems that control critical infrastructure. In some quarters, thoughtful individuals increasingly are asking whether we can satisfactorily control AI as it becomes more powerful. Concerns are sometimes posed regarding AI control of critical infrastructure like the electrical grid, water system, and city traffic flows.

This is the right time to discuss this question. This blueprint proposes new safety requirements that, in effect, would create safety brakes for AI systems that control the operation of designated critical infrastructure. These fail-safe systems would be part of a comprehensive approach to system safety that would keep effective human oversight, resilience, and robustness top of mind. In spirit, they would be similar to the braking systems engineers have long built into other technologies such as elevators, school buses, and high-speed trains, to safely manage not just everyday scenarios, but emergencies as well.

In this approach, the government would define the class of high-risk AI systems that control critical infrastructure and warrant such safety measures as part of a comprehensive approach to system management. New laws would require operators of these systems to build safety brakes into high-risk AI systems by design. The government would then ensure that operators test high-risk systems regularly to confirm that the system safety measures are effective. And AI systems that control the operation of designated critical infrastructure would be deployed only in licensed AI datacenters that would provide a second layer of protection through the ability to apply these safety brakes, thereby ensuring effective human control.

Third, develop a broad legal and regulatory framework based on the technology architecture for AI. We believe there will need to be a legal and regulatory architecture for AI that reflects the technology architecture for AI itself. In short, the law will need to place various regulatory responsibilities upon different actors based upon their role in managing different aspects of AI technology.

For this reason, this blueprint includes information about some of the critical pieces that go into building and using new generative AI models. Using this as context, it proposes that different laws place specific regulatory responsibilities on the organizations exercising certain responsibilities at three layers of the technology stack: the applications layer, the model layer, and the infrastructure layer.

This should first apply existing legal protections at the applications layer to the use of AI. This is the layer where the safety and rights of people will most be impacted, especially because the impact of AI can vary markedly in different technology scenarios. In many areas, we don’t need new laws and regulations. We instead need to apply and enforce existing laws and regulations, helping agencies and courts develop the expertise needed to adapt to new AI scenarios.

There will then be a need to develop new law and regulations for highly capable AI foundation models, best implemented by a new government agency. This will impact two layers of the technology stack. The first will require new regulations and licensing for these models themselves. And the second will involve obligations for the operators of the AI infrastructure on which these models are developed and deployed. The blueprint that follows offers suggested goals and approaches for each of these layers.

In doing so, this blueprint builds in part on a principle developed in recent decades in banking to protect against money laundering and criminal or terrorist use of financial services. The “Know Your Customer” – or KYC – principle requires that financial institutions verify customer identities, establish risk profiles, and monitor transactions to help detect suspicious activity. It would make sense to take this principle and apply a KY3C approach that creates in the AI context certain obligations to know one’s cloud, one’s customers, and one’s content.

In the first instance, the developers of designated, powerful AI models should “know the cloud” on which their models are developed and deployed. In addition, for scenarios such as those that involve sensitive uses, the company that has a direct relationship with a customer – whether it be the model developer, application provider, or cloud operator on which the model is operating – should “know the customers” that are accessing it.

Also, the public should be empowered to “know the content” that AI is creating through the use of a label or other mark informing people when something like a video or audio file has been produced by an AI model rather than a human being. This labeling obligation should also protect the public from the alteration of original content and the creation of “deep fakes.” This will require the development of new laws, and there will be many important questions and details to address. But the health of democracy and future of civic discourse will benefit from thoughtful measures to deter the use of new technology to deceive or defraud the public.

Fourth, promote transparency and ensure academic and nonprofit access to AI. We believe a critical public goal is to advance transparency and broaden access to AI resources. While there are some important tensions between transparency and the need for security, there exist many opportunities to make AI systems more transparent in a responsible way. That’s why Microsoft is committing to an annual AI transparency report and other steps to expand transparency for our AI services.

We also believe it is critical to expand access to AI resources for academic research and the nonprofit community. Basic research, especially at universities, has been of fundamental importance to the economic and strategic success of the United States since the 1940s. But unless academic researchers can obtain access to substantially more computing resources, there is a real risk that scientific and technological inquiry will suffer, including relating to AI itself. Our blueprint calls for new steps, including steps we will take across Microsoft, to address these priorities.

Fifth, pursue new public-private partnerships to use AI as an effective tool to address the inevitable societal challenges that come with new technology. One lesson from recent years is what democratic societies can accomplish when they harness the power of technology and bring the public and private sectors together. It’s a lesson we need to build upon to address the impact of AI on society.

We will all benefit from a strong dose of clear-eyed optimism. AI is an extraordinary tool. But, like other technologies, it too can become a powerful weapon, and there will be some around the world who will seek to use it that way. But we should take some heart from the cyber front and the last year-and-a-half in the war in Ukraine. What we found is that when the public and private sectors work together, when like-minded allies come together, and when we develop technology and use it as a shield, it’s more powerful than any sword on the planet.

Important work is needed now to use AI to protect democracy and fundamental rights, provide broad access to the AI skills that will promote inclusive growth, and use the power of AI to advance the planet’s sustainability needs. Perhaps more than anything, a wave of new AI technology provides an occasion for thinking big and acting boldly. In each area, the key to success will be to develop concrete initiatives and bring governments, respected companies, and energetic NGOs together to advance them. We offer some initial ideas in this report, and we look forward to doing much more in the months and years ahead.

Governing AI within Microsoft

Ultimately, every organization that creates or uses advanced AI systems will need to develop and implement its own governance systems. Section Two of this paper describes the AI governance system within Microsoft – where we began, where we are today, and how we are moving into the future.

As this section recognizes, the development of a new governance system for new technology is a journey in and of itself. A decade ago, this field barely existed. Today, Microsoft has almost 350 employees specializing in it, and we are investing in our next fiscal year to grow this further.

As described in this section, over the past six years we have built out a more comprehensive AI governance structure and system across Microsoft. We didn’t start from scratch, borrowing instead from best practices for the protection of cybersecurity, privacy, and digital safety. This is all part of the company’s comprehensive enterprise risk management (ERM) system, which has become a critical part of the management of corporations and many other organizations in the world today.

When it comes to AI, we first developed ethical principles and then had to translate these into more specific corporate policies. We’re now on version 2 of the corporate standard that embodies these principles and defines more precise practices for our engineering teams to follow. We’ve implemented the standard through training, tooling, and testing systems that continue to mature rapidly. This is supported by additional governance processes that include monitoring, auditing, and compliance measures.

As with everything in life, one learns from experience. When it comes to AI governance, some of our most important learning has come from the detailed work required to review specific sensitive AI use cases. In 2019, we founded a sensitive use review program to subject our most sensitive and novel AI use cases to rigorous, specialized review that results in tailored guidance. Since that time, we have completed roughly 600 sensitive use case reviews. The pace of this activity has quickened to match the pace of AI advances, with almost 150 such reviews taking place in the past 11 months.

All of this builds on the work we have done and will continue to do to advance responsible AI through company culture. That means hiring new and diverse talent to grow our responsible AI ecosystem and investing in the talent we already have at Microsoft to develop skills and empower them to think broadly about the potential impact of AI systems on individuals and society. It also means that, much more than in the past, the frontier of technology requires a multidisciplinary approach that combines great engineers with talented professionals from across the liberal arts.

All this is offered in this paper in the spirit that we’re on a collective journey to forge a responsible future for artificial intelligence. We can all learn from each other. And no matter how good we may think something is today, we will all need to keep getting better.

As technological change accelerates, the work to govern AI responsibly must keep pace with it. With the right commitments and investments, we believe it can.